Managing, the next challenge for AI

Most people experience AI today as a kind of assistant or co-pilot. You give it a prompt, it produces an answer — often useful, sometimes brilliant, sometimes wrong. But real organizations don’t just need tasks executed. They need projects completed.

This is where the idea of a Manager Agent comes in: an AI system designed to plan, assign, monitor, adapt, and communicate in human-AI teams. In other words, not just an intelligent worker, but an intelligent manager.

The Manager Agent Challenge, recently published by the DeepFlow team at the 2025 International Conference on Distributed Artificial Intelligence in London, is a new research benchmark designed to test exactly this. The paper is now available on arXiv.

Reasoning Isn’t Enough

One of the most striking findings from the paper is that better reasoning alone doesn’t solve the management problem.

Having no manager is preferable to having a bad one!

Take a look at Figure 2 from the paper:

[Figure 2 from the paper]

The chart shows how different Manager Agents performed across goals, constraints, and time. Agents with strong reasoning (think “Chain-of-Thought” style planning) hit more goals.

But they still:

- Blew through time budgets.

- Struggled with shifting stakeholder preferences.

- Neglected governance checks.

In other words: you can be smart and still manage badly.

The Four Hard Problems

Our research frames four frontier challenges that define Manager Agents.

- Hierarchical planning

AI must learn when to break tasks down, when to refine further, and when to stop. Over-decomposition wastes time; under-decomposition causes bottlenecks.

- Balancing multiple objectives

Every business knows that cost, speed, compliance, and satisfaction pull in different directions. AI needs to optimize across these moving targets, not just pick one.

- Ad-hoc teaming

Teams are dynamic. People join, leave, or switch roles mid-project. AI must adapt workflows when the roster changes.

- Governance by design

Rules and regulations can’t be an afterthought. The Manager Agent has to enforce constraints as part of execution, not just audit after the fact.
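To make "governance by design" and "balancing multiple objectives" a little more concrete, here is a minimal Python sketch. It is not the paper's method. It assumes a hypothetical `Action` record with made-up cost, time, and satisfaction estimates, treats governance as a hard filter applied before anything is chosen, and then ranks the remaining actions with an illustrative weighted score. Every name, weight, and number below is an assumption for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Action:
    """Hypothetical candidate action with illustrative estimates."""
    name: str
    cost: float          # lower is better
    hours: float         # lower is better
    satisfaction: float  # higher is better, in [0, 1]
    approved: bool       # stands in for a governance check


# Illustrative weights; a real system would update these as preferences shift.
WEIGHTS = {"cost": 0.3, "hours": 0.3, "satisfaction": 0.4}


def score(action: Action, max_cost: float, max_hours: float) -> float:
    """Weighted score; cost and hours are normalised and inverted."""
    return (
        WEIGHTS["cost"] * (1 - action.cost / max_cost)
        + WEIGHTS["hours"] * (1 - action.hours / max_hours)
        + WEIGHTS["satisfaction"] * action.satisfaction
    )


def choose(actions: List[Action]) -> Optional[Action]:
    """Enforce governance first, then optimise over what remains."""
    allowed = [a for a in actions if a.approved]
    if not allowed:
        return None  # nothing compliant: escalate rather than break a rule
    # Fall back to 1.0 so an all-zero column cannot cause division by zero.
    max_cost = max(a.cost for a in allowed) or 1.0
    max_hours = max(a.hours for a in allowed) or 1.0
    return max(allowed, key=lambda a: score(a, max_cost, max_hours))


candidates = [
    Action("ship_without_review", cost=10, hours=2, satisfaction=0.9, approved=False),
    Action("ship_after_review", cost=20, hours=8, satisfaction=0.8, approved=True),
    Action("full_audit_then_ship", cost=40, hours=16, satisfaction=0.7, approved=True),
]
best = choose(candidates)
print(best.name if best else "escalate")  # prints "ship_after_review"
```

The cheapest and fastest option never reaches the scoring step because it fails the governance check. That is the difference between enforcing a constraint during execution and auditing it afterwards.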

Two Styles of AI Manager

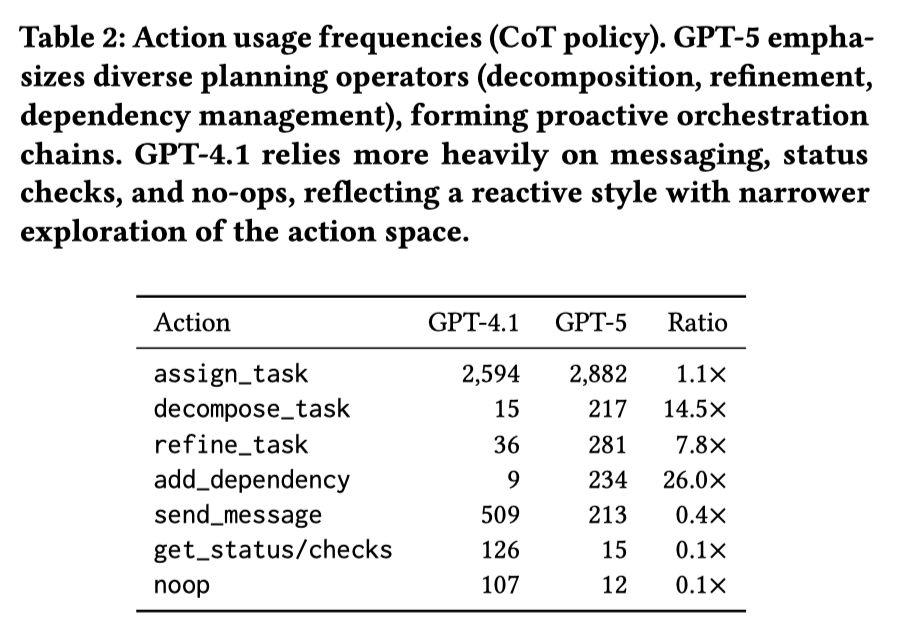

When our researchers tested large language models, they noticed two very different “personalities”:

- Proactive orchestrators (e.g. GPT-5) — heavy use of task decomposition, refinement, and dependency mapping.

- Reactive communicators (e.g. GPT-4.1) — frequent messaging, status checks, and surface-level coordination.

Here’s Table 2 from the paper that shows operator usage:

This table shows the action usage frequencies (CoT policy). GPT-5 emphasizes diverse planning operators (decomposition, refinement, dependency management), forming proactive orchestration chains. GPT-4.1 relies more heavily on messaging, status checks, and no-ops, reflecting a reactive style with narrower exploration of the action space.

This divergence is fascinating: it suggests AI “management styles” may need to be matched to the industry, the specific project, or the team culture.

The Stakeholder Blind Spot

One surprising finding: across hundreds of repeated runs, fewer than 5% of agent actions involved engaging with stakeholders.

That means agents rarely paused to clarify shifting preferences or report status. For human managers, this would be a disaster — imagine running a project without client check-ins!

Improving this “stakeholder metric” may be as important as raw reasoning power.
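As a rough illustration of how such a share could be measured, the sketch below tallies a log of agent actions and reports the fraction that engage a stakeholder. The action labels and the log are hypothetical; the paper's actual operator names and counts may differ.

```python
from collections import Counter
from typing import List

# Hypothetical action labels; the paper's real operator names may differ.
STAKEHOLDER_ACTIONS = {"ask_stakeholder", "report_status"}


def stakeholder_share(action_log: List[str]) -> float:
    """Fraction of logged actions that engage a stakeholder."""
    if not action_log:
        return 0.0
    counts = Counter(action_log)
    engaged = sum(counts[a] for a in STAKEHOLDER_ACTIONS)
    return engaged / len(action_log)


# Made-up log of 40 actions, not data from the paper.
log = (
    ["decompose_task"] * 18
    + ["assign_task"] * 15
    + ["no_op"] * 6
    + ["ask_stakeholder"]
)
print(f"{stakeholder_share(log):.1%}")  # 1 of 40 actions, prints "2.5%"
```

Tracking this number per run makes it easy to see whether a change to the agent raises stakeholder engagement or only changes how it plans.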

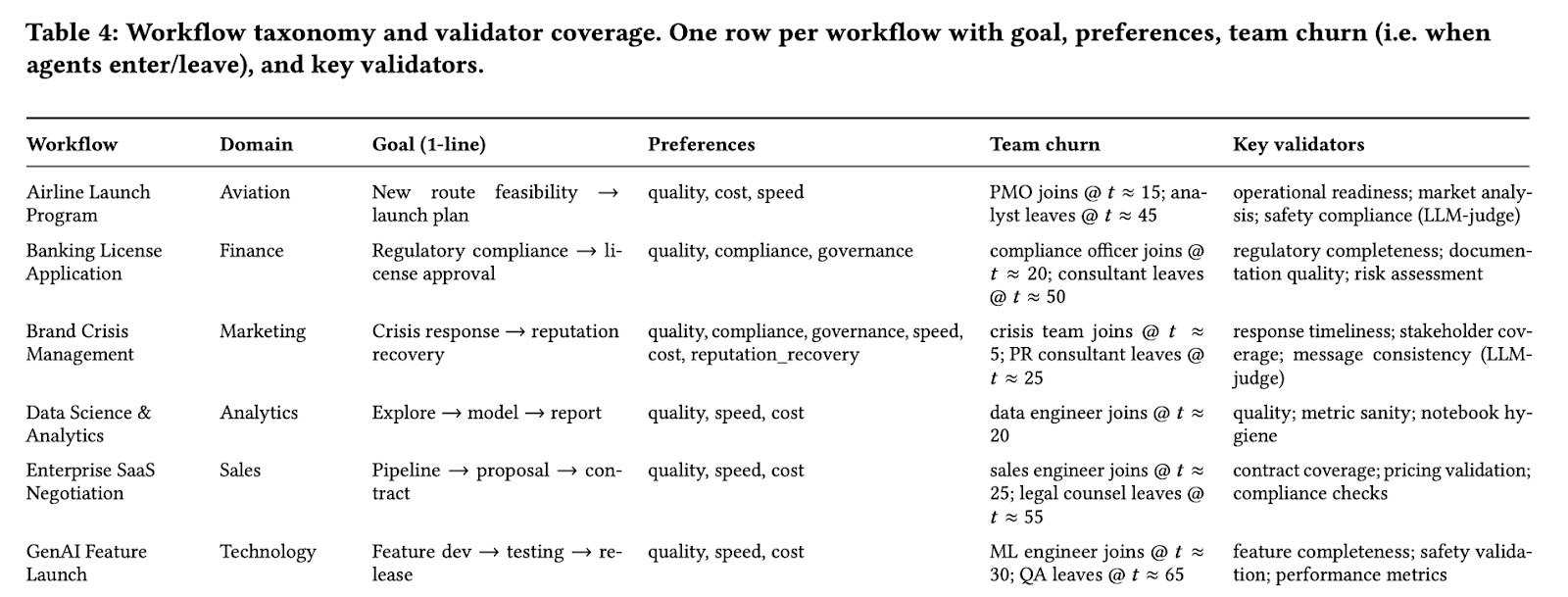

Building MA-Gym

To study these problems, our team built and released the open source Manager Agent Gym (MA-Gym): a simulated environment where agents are dropped into realistic workflows (finance, healthcare, legal, tech) and must juggle goals, constraints, preferences, and team churn.

Here’s an extract from Table 4 from the paper, which lists some of the scenarios:

Further examples include:

- A “Legal Global Data Breach” scenario where rules change mid-way.

- A “Financial Portfolio Rebalance” with conflicting objectives.

- A “Healthcare Vaccine Rollout” with shifting team composition.

Each workflow stresses different aspects of management skill.

Why It Matters for Business and Research

For businesses, the message is clear: orchestration is the bottleneck. Without governance, adaptability, and stakeholder engagement, AI won’t deliver reliable outcomes.

For researchers, MA-Gym provides a platform to test new ideas:

- Can preference drift be handled with lightweight adaptation?

- How do we prevent human bottlenecks on the critical path?

- What’s the right balance between proactive planning and reactive communication?

Closing Thought

We often treat AI progress as a race for better reasoning. But in real organizations, intelligence is not enough. Execution, coordination, and compliance are what deliver value.

At DeepFlow, we’re addressing those challenges. This work is published at the 2025 International Conference on Distributed Artificial Intelligence in London and is being presented there on Nov 21-24, 2025. Come and discuss it in person!