How on-policy reinforcement learning holds on to the past

At this week's ML reading group at DeepFlow, we found ourselves circling back to one of the oldest headaches in machine learning: catastrophic forgetting. Why do some fine-tuning methods preserve a model's knowledge, while others wipe the slate clean? The paper RL's Razor by MIT's Improbable AI Lab provides an answer: look at the KL divergence, and you’ll see why.

Why this paper caught our attention

Catastrophic forgetting, the tendency of foundation models to lose prior capabilities when fine-tuned on new tasks, is a roadblock to building long-lived, adaptive systems. For a platform like DeepFlow, which orchestrates multi-agent workflows and increasingly leans on continual learning in production pipelines, understanding how to mitigate forgetting is critical.

RL's Razor argues that the forward KL divergence between the base model and the fine-tuned model, measured on new-task data, predicts how much a model forgets, and that keeping this divergence small limits catastrophic forgetting. The paper compares supervised fine-tuning (SFT) and on-policy reinforcement learning (RL) across language and robotics tasks and observes a striking pattern: at matched new-task performance, RL retained much more of the model’s prior skills than SFT.

What the paper claims

The paper makes three key contributions:

- Empirical gap between RL and SFT

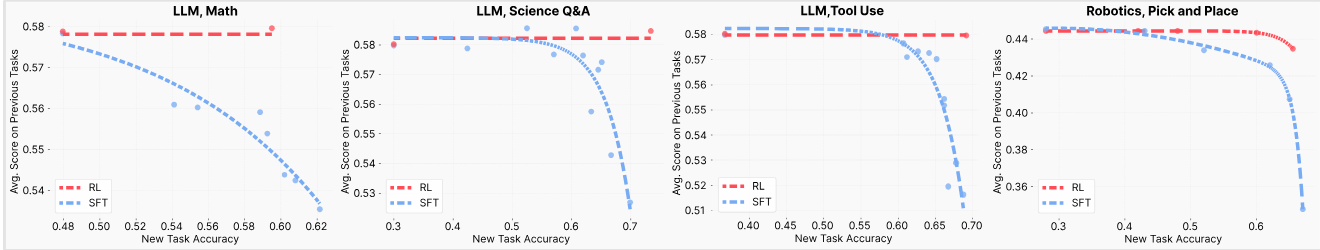

Across three LLM tasks (math reasoning, science Q&A, tool use) and a robotics pick-and-place benchmark, RL models reached comparable accuracy to SFT models on new tasks while preserving significantly higher scores on old tasks. The results are visualized as Pareto frontiers (see figure), showing the trade-off between new-task performance (x-axis) and prior-task retention (y-axis). RL's frontier consistently dominates SFT's: for a given level of new-task performance, RL retains more prior-task performance.

- The Forgetting Law

Forgetting correlates strongly with forward KL divergence between the base and fine-tuned model on new-task data. In quantitative terms: forward KL achieved an R² ≈ 0.96, making it the strongest predictor of forgetting. Competing measures (reverse KL: 0.93, total variation: 0.80, L2 distributional shift: 0.56, weight change norms: ~0.34–0.58) fell short.

- The principle of RL's Razor

Among all high-reward solutions to a new task, on-policy RL implicitly prefers those that stay closest in KL divergence to the original model. By continually sampling from its own distribution, on-policy RL biases updates toward KL-conservative paths, reducing catastrophic forgetting.

A toy case (Parity-EMNIST) confirmed the mechanism: solutions that keep forward KL small retain both old and new knowledge, while others "forget" digit classification entirely.
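To make the "closest high-reward solution" idea concrete, here is a minimal sketch of our own, not code from the paper. It assumes a hypothetical four-answer task in which the last two answers both count as correct, and it compares two hand-picked fine-tuned policies that earn the same expected reward. The distributions, the reward vector, and the helper names `forward_kl` and `expected_reward` are all illustrative assumptions. The sketch does not simulate RL or SFT training; it only shows that equally good solutions can sit at very different forward KL from the base model.

```python
import math

def forward_kl(base, tuned, eps=1e-12):
    """KL(base || tuned) in nats for two discrete distributions (lists of probabilities)."""
    return sum(p * math.log(p / max(q, eps)) for p, q in zip(base, tuned) if p > 0)

def expected_reward(policy, reward):
    """Expected reward of a policy under a per-answer reward vector."""
    return sum(p * r for p, r in zip(policy, reward))

# Hypothetical toy task: four possible answers, the last two are both correct.
base = [0.40, 0.30, 0.25, 0.05]
reward = [0.0, 0.0, 1.0, 1.0]

# Two hand-picked candidate policies with the same expected reward (0.9).
near = [0.06, 0.04, 0.75, 0.15]  # leans on the correct answer the base model already liked
far = [0.06, 0.04, 0.05, 0.85]   # leans on the correct answer the base model rarely gave

for name, policy in [("near", near), ("far", far)]:
    print(name,
          "reward:", round(expected_reward(policy, reward), 3),
          "forward KL:", round(forward_kl(base, policy), 3))
```

Both candidates solve the new task equally well, but `near` has the smaller forward KL from `base`. In the paper's framing, this is the kind of solution on-policy RL tends toward, and the kind the Forgetting Law predicts will forget less.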

Figure: Pareto frontiers of RL and SFT show that RL consistently retains prior-task performance while learning new tasks, whereas SFT trades old skills for new accuracy

Why it matters for DeepFlow

- Monitoring: During fine-tuning of workflow models, we could track forward KL as a proxy for forgetting risk—without needing to re-test on every prior benchmark.

- Curriculum learning: At DeepFlow, we want models to act as reliable workers, performing their own tasks well while optimizing for the overall workflow reward. Getting there involves several distinct skills: a model may need to learn constraint adherence, tool calling within our scaffold, or other specialized behaviors. This is best approached as a multi-step curriculum, where each stage builds progressively on the last.

- Avoiding forgetting in multi-step training: A key challenge with curriculum learning is ensuring that each new round of training does not overwrite what came before. RL's Razor shows that on-policy reinforcement learning, by biasing toward KL-minimal solutions, is much better at preserving prior knowledge across steps than supervised fine-tuning. For us, this highlights that if we want to build long-lived, multi-skilled agents, RL-based methods may be the safer route for sequential training.
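The monitoring idea in the first bullet could look roughly like the sketch below. It is an illustration under stated assumptions, not our production code and not the paper's estimator. It assumes we can obtain next-token logits from both the base and the fine-tuned model at the same positions on a batch of new-task inputs, represented here as plain Python lists. The function names and `KL_ALERT_THRESHOLD` are hypothetical, and the threshold value is a placeholder that would need calibrating against real retention measurements.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over one logit vector."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]

def mean_forward_kl(base_logits, tuned_logits):
    """Average KL(base || tuned) in nats over a batch of logit vectors."""
    total = 0.0
    for b, t in zip(base_logits, tuned_logits):
        lb, lt = log_softmax(b), log_softmax(t)
        total += sum(math.exp(pb) * (pb - pt) for pb, pt in zip(lb, lt))
    return total / len(base_logits)

# Hypothetical alert budget in nats; a placeholder, not a recommended value.
KL_ALERT_THRESHOLD = 0.05

def check_drift(base_logits, tuned_logits, threshold=KL_ALERT_THRESHOLD):
    """Return (mean forward KL, whether it exceeds the threshold)."""
    kl = mean_forward_kl(base_logits, tuned_logits)
    return kl, kl > threshold

# Toy batch: two positions, vocabulary of three tokens.
base_batch = [[2.0, 1.0, 0.0], [0.5, 0.5, 0.5]]
same_batch = [[2.0, 1.0, 0.0], [0.5, 0.5, 0.5]]     # checkpoint identical to base
drifted_batch = [[0.0, 1.0, 3.0], [2.0, 0.0, 0.0]]  # checkpoint that moved a lot

print(check_drift(base_batch, same_batch))
print(check_drift(base_batch, drifted_batch))
```

In a training loop, a check like this could run on a held-out slice of new-task prompts at each checkpoint, with full prior-benchmark evaluation triggered only when the flag fires.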

Closing reflections

The beauty of RL's Razor lies in its simplicity: forgetting is not a mystery—it is a function of how far you stray from where you started. On-policy RL stays close, while SFT often wanders.

For those of us thinking about continual-learning workflows, this paper offers a useful intuition: stability often comes from methods that minimize unnecessary distributional shifts. It's not a prescriptive rule, but in practice, this means keeping an eye on forward KL—not just accuracy—when building adaptive AI.

If Sutton gave us "the Bitter Lesson," RL's Razor offers a sweeter one: If foundation models are to act as lifelong collaborators, we need them not just to learn new tricks, but to remember the old ones. RL's Razor sharpens that path.

This post is part of DeepFlow's ML Reading Group series, where we share reflections on the latest AI research and its impact on workflow automation.